Osm

OpenStreetmap container that contains a postgresql cartography database of the planet, the renderd daemon and an apache2 webserver to serve rendered tiles.

Cartography pipeline

postgresql

Data storage, mostly in planet_osm_* tables, but some smaller tables for e.g. coastlines and contours for the /contours/ layer, all in dbname=gis.

Filesystem

The filesystem /mnt/dbp is a separate zfs dataset, and includes some sub-datasets of non-database files, but that are still relevant to the cartography.

The database has a symlink /var/lib/postgresql/12 -> /mnt/dbp/12 and stores all its data there.

Previously, that was using zstd-19 compression, reaching a 3.77x compression ratio (lz4 attained about 2.5x, and gzip-9 3.5x, the additional CPU load is worth the disk space headroom), and using about 560 GB of disk space. This was slightly sub-optimal because I acquired some more SSD space and now a compression level of zstd-8 (concluded from a few empiric rendering benchmarks) is enough to not waste too much CPU cycles on compression/decompression. With that, the database alone uses 678GB.

The sub-dataset /mnt/dbp/maps stores database-adjacent files from openstreetmap and for osm2pgsql.

- To allow regular updates,

/mnt/dbp/maps/planet.bin.nodesis a binary file that also needs to be saved though it is not used for rendering; it uses about 50 GB. - The directory

/mnt/dbp/maps/tmpstores .osc changefiles that have not-yet been imported to the database, there is a scheduled task that imports and upon success removes those. - The file

/mnt/dbp/maps/state.txtsaves the stateNumber up to which all .osc files have either been downloaded or even imported.

This structure allows for a recursive zfs snapshot to represent a consistent state of the database.

The sub-dataset /mnt/dbp/tiles stores the cache for all the rendered tiles.

How to import planet.osm.pbf

The import process may overuse memory and crash the server. To mitigate this, the following was used (though only as long as nodes+ways+rels were processed until 2022-04-05 08:35, not at the database operations)

$ while true;do while [ "$(free --giga|grep Mem|xargs|cut -d' ' -f7)" -gt 2 ];do printf '\033[2K\r%s' "$(free --giga|grep Mem|xargs|cut -d' ' -f7)";sleep 1;done;sudo kill -9 "$(pidof osm2pgsql)";free -h;sleep 5;free -h;doneThis loop did not engage once, but the import would consistently crash the server before. Maybe just a happy coincidence, this time.

Also, it seemed that limiting CPU usage helped mitigate RAM overuse:

$ cpulimit -fl 400 -- osm2pgsql --create --multi-geometry --slim --hstore --style openstreetmap-with-more.style --tag-transform-script openstreetmap-adjusted-for-cyclosm.lua -d gis -C 14000 --number-processes 4 --flat-nodes /mnt/maps/planet.bin.nodes /mnt/maps/planet-220328.osm.pbf

Process 835 detected

2022-04-04 12:16:47 osm2pgsql version 1.5.2 (1.5.2-15-g25a1e9d1)

2022-04-04 12:16:47 Database version: 12.10 (Ubuntu 12.10-1.pgdg20.04+1)

2022-04-04 12:16:47 PostGIS version: 3.2

2022-04-04 12:16:47 Setting up table 'planet_osm_point'

2022-04-04 12:16:47 Setting up table 'planet_osm_line'

2022-04-04 12:16:47 Setting up table 'planet_osm_polygon'

2022-04-04 12:16:48 Setting up table 'planet_osm_roads'

Processing: Node(1640k 546.7k/s) Way(0k 0.00k/s) Relation(0 0.0/s)

Processing: Node(1002480k 1842.8k/s) Way(0k 0.00k/s) Relation(0 0.0/s)

Processing: Node(4575560k 1902.5k/s) Way(0k 0.00k/s) Relation(0 0.0/s)

Processing: Node(7587737k 1861.6k/s) Way(91455k 10.28k/s) Relation(0 0.0/s)

Processing: Node(7587737k 1861.6k/s) Way(262832k 13.34k/s) Relation(0 0.0/s)

Processing: Node(7587737k 1861.6k/s) Way(847173k 18.33k/s) Relation(1786720 216.9/s)

2022-04-05 08:35:27 Reading input files done in 73119s (20h 18m 39s).

2022-04-05 08:35:27 Processed 7587737772 nodes in 4076s (1h 7m 56s) - 1862k/s

2022-04-05 08:35:27 Processed 847173340 ways in 46225s (12h 50m 25s) - 18k/s

2022-04-05 08:35:27 Processed 9773277 relations in 22818s (6h 20m 18s) - 428/s

2022-04-05 08:35:28 Clustering table 'planet_osm_point' by geometry...

2022-04-05 08:35:28 Clustering table 'planet_osm_polygon' by geometry...

2022-04-05 08:35:28 Clustering table 'planet_osm_line' by geometry...

2022-04-05 08:35:28 Clustering table 'planet_osm_roads' by geometry...

2022-04-05 10:22:51 Creating geometry index on table 'planet_osm_roads'...

2022-04-05 10:50:28 Creating osm_id index on table 'planet_osm_roads'...

2022-04-05 10:56:22 Creating geometry index on table 'planet_osm_point'...

2022-04-05 10:58:21 Analyzing table 'planet_osm_roads'...

2022-04-05 10:58:34 Done postprocessing on table 'planet_osm_nodes' in 0s

2022-04-05 10:58:34 Building index on table 'planet_osm_ways'

2022-04-05 13:38:32 Creating osm_id index on table 'planet_osm_point'...

2022-04-05 13:55:29 Analyzing table 'planet_osm_point'...

2022-04-05 13:55:45 Building index on table 'planet_osm_rels'

2022-04-05 15:38:19 Creating geometry index on table 'planet_osm_line'...

2022-04-05 21:40:06 Creating osm_id index on table 'planet_osm_line'...

2022-04-05 22:12:14 Analyzing table 'planet_osm_line'...

2022-04-06 00:58:09 Creating geometry index on table 'planet_osm_polygon'...

2022-04-06 18:13:34 Creating osm_id index on table 'planet_osm_polygon'...

2022-04-06 18:55:31 Analyzing table 'planet_osm_polygon'...

2022-04-07 10:36:05 Done postprocessing on table 'planet_osm_ways' in 171451s (47h 37m 31s)

2022-04-07 10:36:05 Done postprocessing on table 'planet_osm_rels' in 1214s (20m 14s)

2022-04-07 10:36:05 All postprocessing on table 'planet_osm_point' done in 19216s (5h 20m 16s).

2022-04-07 10:36:05 All postprocessing on table 'planet_osm_line' done in 49019s (13h 36m 59s).

2022-04-07 10:36:05 All postprocessing on table 'planet_osm_polygon' done in 123615s (34h 20m 15s).

2022-04-07 10:36:05 All postprocessing on table 'planet_osm_roads' done in 8586s (2h 23m 6s).

2022-04-07 10:36:05 osm2pgsql took 253158s (70h 19m 18s) overall.

Child process is finished, exiting...Reconciliation of database schemas for rendering of two layer types

Layers /plain*/ and /cyclosm/ differ on their assumptions of the database schema. These assumptions are specified at import time to osm2pgsql. The details about which columns are extracted from planet.osm.pbf and how they are converted, are specified by --style and --tag-transform-script, the current files are: [osm2pgsql] --style openstreetmap-with-more.style --tag-transform-script openstreetmap-adjusted-for-cyclosm.lua. The .style file specifies which columns to take into the database. To unite both layers, it changes some columns from the /plain*/ layers' .style to apply not only to nodes but nodes,ways and adds a few new columns specific to /cyclosm/. There was one explicit conflict (the items before were only extending data to cover both layer types plain* and cyclosm): the layer column, regarding its type. The cyclosm mapnik.xml stylesheet only mentions the column four times and assumes it is text, whereas /plain*/ takes it as int4(and mentions it much more). Cyclosm actually converts layer to integer, so only a few manual edits, to remove type casts, in its stylesheet and views.sql (the SQL indexes builder) were necessary:

(cyclosm stylesheet.xml)

CASE WHEN layer~E'^\\d+$' THEN layer::integer ELSE 0 ENDreplaced by

CASE WHEN layer IS NOT NULL THEN layer ELSE 0 ENDand (views.sql)

CASE WHEN layer~E'^\\d+$' THEN 100*layer::integer+199 ELSE 199 ENDreplaced by

CASE WHEN layer IS NOT NULL THEN 100*layer+199 ELSE 199 ENDrenderd

Previous rendering daemon, the config file at /usr/local/etc/renderd.conf specifies which layers exist and their stylesheets. Uses the mapnik renderer, accessed as a python module.

Tirex

Current rendering daemon. It still uses mapnik to actually render but has a few advantages over the default renderd: one is more configurability for prioritization. But the main point is, it seems to not have a memory leak problem like renderd (I was uncertain whether it is renderd or mapnik, but it clearly seems to be renderd). Currently, the configuration resides in /etc/tirex/ over multple files (one main tirex.conf and one config per map layer). This differrent configuration structure means mod_tile does not understand it and its LoadTileConfigFile directive is still /usr/local/etc/renderd.conf. Quite clumsy, as any edits to renderable layers need to be made in two places, but it works.

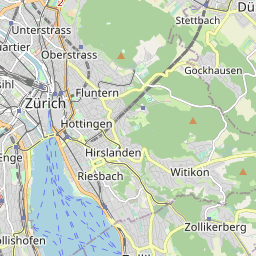

Layer /plain/

The standard OSM map layer. The stylesheet is generated by cartocss and located at ~/src/openstreetmap-carto/mapnik_plain.xml.

Planned is a change to merge/unmerge it with the layer plain_overlay: replace the layer plain with plain_base and to then view a plain map, show plain_base+plain_overlay. This means rewriting the xml-transforming script /home/user/flip_colours_to_transparent.py that generates plain_overlay.xml from plain.xml to actually parse the xml and split it into plain_overlay.xml + plain_base.xml. This way, the mostly taxing rendering of line and point features, and texts is not happening twice in plain and plain_overlay.

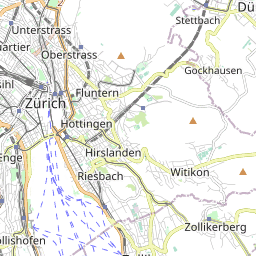

Layer /plain_overlay/

Modified /plain/ layer to change colours of aerial elements (elements that have area, not point- or linelike) to "transparent", to make laying it over a second "base" layer, satellite imagery for example, possible. The Stylesheet is generated by $ python3 flip_colours_to_transparent.py {mapnik_plain}.xml > {mapnik_plain-overlay}.xml, where the python script only replaces a few of the <PolygonSymbolizer> fill colour attributes by fill="transparent". This works well, also supported by the fact that the rendered raster tiles are in .png format which supports transparent colour. See above for planned change.

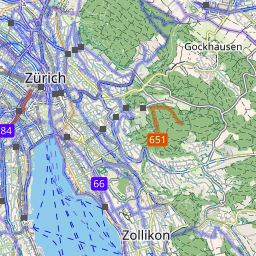

Layer /cyclosm/

Cycling-optimized map layer, the database schema is widened to contain all necessary data for both /plain*/ and /cyclosm/, see Reconciliation_of_database_schemas_for_rendering_of_two_layer_types. The xml stylesheet is generated in the working directory ~/src from the repo cyclosm-cartocss-style where the node module kosmtik is available, though only executable with a statically installed lts-version of nodejs in /root/(which has been made world-readable as a consequence).

$ export PATH="/root/node-v16.14.1-linux-x64/bin:$PATH"

$ node_modules/kosmtik/index.js export cyclosm-cartocss-style/project.mml --output cyclosm-cartocss-style/mapnik.xmlThe style does support a few settings for rendering, specified in cyclosm-cartocss-style/localconfig.js but changing the database name did not work, so to replace the default database name osm with gis:

$ sed -e 's_name="dbname"><!\[CDATA\[osm\]\]>_name="dbname"><!\[CDATA\[gis\]\]>_g' cyclosm-cartocss-style/mapnik.xml > cyclosm-cartocss-style/cyclosm-dbname.xmlThen, also edit the layer column usage as in Reconciliation_of_database_schemas_for_rendering_of_two_layer_types:

$ cp cyclosm-cartocss-style/cyclosm-dbname.xml cyclosm-cartocss-style/cyclosm-dbname-layer.xml

$ vim cyclosm-cartocss-style/cyclosm-dbname-layer.xmlLayer /contours/

Display contour lines of the same altitude. Similarly to #Layer_/plain_overlay/, background color is transparent to allow contour lines being used ontop of another map. The completely static data is imported once into the table contours by:

$ gdal_contour -i 10 -snodata 32767 -a height {in}.tif {out}.tif.shp

$ shp2pgsql -a -s 4326 -g way {shapefile}.shp contours|psql -d gisNote that we set the SRID to 4326 (lat/lon units projection) instead of leaving it unset. Most guidance on this is from the osm wiki page for contours. The ~/contours.xml also comes from there. The final shell loop with progress reports is:

$ { t=$(ls -w1 /mnt/18a/raster/SRTM_GL3_srtm/*.shp|wc -l);for i in /mnt/18a/raster/SRTM_GL3_srtm/*.shp;do printf '\033[2K\r%s\n' "$((c++))/$t $i">&2;shp2pgsql -a -s 4326 -g way "$i" contours 2>stderr;done; }|grep -v 'ANALYZE'|dd status=progress|sudo lxc-attach -n osm -- sudo -u user -- psql -d gis > db_import.logNote the removal of ANALYZE "contours" statements after every shapefile. ANALYZE was run once at the end, allowing a total speedup from 3MB/s (and declining as import progresses) to 12MB/s (and increasing to 18MB/s at the end) as reported by dd. For considerably speeding up rendering, the shp2pgsql -I create index option could be used, but instead we want, like for ANALYSE, to only create the index once at the end because it is an expensive operation (another approach would be to create the index at the start and let the database build it up while importing, though 18MB/s would probably not be reached. CONCURRENTLY would not be possible):

CREATE INDEX CONCURRENTLY IF NOT EXISTS contours_way ON contours USING GIST(way);We can also make use of this index for the height:

CREATE INDEX CONCURRENTLY IF NOT EXISTS contours_expr_height ON contours((height%10),(height%50),(height%100));A cluster could increase database performance by a little bit. If we have the time and some available RAM, this could take up to a few hours.

SET statement_timeout=0;

SET maintenance_work_mem='5GB';

CLUSTER VERBOSE contours USING contours_way;In total, postgres reports that the contours table uses 223 GB. This data does not update so nothing more is needed for the future, except maybe instruct the rendering daemon to never re-render any cached /contours/ tiles (currently it will re-render them whenever they expire).

apache2

The apache module mod_tile on Lada reads a renderd-syntax config file at /mnt/dbp/modtile.confand serves tiles on the configured urls /maps/{layer_name}/{z}/{x}/{y}.png. It requests them from the rendering daemon on Osm, through the socket /mnt/dbp/modtile.sock if they are not present in the cache /mnt/dbp/tiles/{layer_name}.

Nominatim

API for processing search queries on the database. It uses a fundamentally different database schema than rendering but some tables hold the same data.

A working prototype implementation is currently on a second database on port 5431, it contains data for switzerland only and works for rendering and searching.

This prototype was only possible since the end of 2022 when the nominatim project added experimental support for the osm2pgsql flex output style. It is a .lua script given to osm2pgsql that defines the database tables and three functions, osm2pgsql.process_node, osm2pgsql.process_way and osm2pgsql.process_relation. Those are then run for every osm object to be imported and add them to the database tables. Because the rendering mode also has experimental support for the flex output, the logic goes that one could simply redefine each of the process_* functions to run the two versions, one from rendering and one from nominatim, to import a union of all data into a single database. A few complications come up, but that sitll is the gist of it :

- double-import into the

planet_osm_waysandplanet_osm_relstables - rendering's

flexis quite experimental, need to calculate:area()for all ways and relations because it'sNULLfor now - order of nominatim then rendering or vice versa seems to do weird bugs -> make a deepcopy of input object

The search function is available through the search bar on Lada#Search_features and also returns geojson outlines of the search results.

TODO: Planet reimport

A planned database upgrade would provide search data for the entire planet and unite the rendering data too. Such a breaking change should be made when the following conditions are fulfilled:

- FILESYSTEM: approximately 1.7TB available space (does not account for compression; currently 950GB compresses to 750GB+100GB/weekly updates with zstd-8)

- FILESYSTEM: approx. double, so 3.4TB space available during import; osm2pgsql clusters imported tables by geometry by re-copying them. Again with compression this could be 2.7TB

- ZFS: change compression approach: zstd-8 is great for "low" disk usage. instead primarily optimize for low query/rendering times and only as a second priority look at disk usage. This means a zstd negative number most probably (in zfs expressed as zstd-fast-x <=> zstd negative x compression), where compression speed is prioritized over compression "thoroughness"

- ZFS: separate postgres/WAL and postgres/data datasets see documentation.

- POSTGRES: postgresql>=16, with postgis,

SELECT postgis_full_version();: - POSTGRES: geos>3.9

GEOS="3.12.1-CAPI-1.18.1" (compiled against GEOS 3.8.0)for ST_MaximumInscribedCircle in get_lakeline instead of ST_PointOnSurface - ZFS+POSTGRES: settings recommended from here and here.

- POSTGRES:

initdb --no-locale -E=UTF8 -n -N -D /db/pgdb1disable pgsql compression and disable pgsql checksumming: zfs does that job - POSTGRES: pg_repack add extension

- OS: move OS to debian 12 or newer

- OSM: make sure to CLUSTER BY way (automatically done by osm2pgsql, but check zfs passes that through down to disk ?)

- OSM: try importing some changefiles, osm2pgsql>=1.8.2 should have a fast algorithm

- OSM: try importing some changefiles on the union database

- OSM: osm2pgsql flex support ? [optimized middle database import]

- OSM: osm2pgsql flex: compatible.lua for rendering: not experimental anymore...?

- OSM: osm2pgsql configure tile expiry

- NOMINATIM: nominatim uses osm2pgsql new database schema: jsonb storage instead of hstore [should be more efficient]

Data updates

/home/user/osmimport.py will import any (potentially merged together before import) .osc.gz files in /mnt/dbp/maps/tmp in alphabetical ascending order and if they imported successfully, remove them. See Perun#/home/user/osmdl.py which downloads the update files into /mnt/dbp/maps/tmp/.