2024-12-23


Take your personal data back with Incogni! Use code WELCHLABS at the link below and get 60% off an annual plan: http://incogni.com/welchlabs

Welch Labs Imaginary Numbers Book!

https://www.welchlabs.com/resources/imaginary-numbers-book

Welch Labs Posters: https://www.welchlabs.com/resources

Special Thanks to Patrons https://www.patreon.com/welchlabs

Juan Benet, Ross Hanson, Yan Babitski, AJ Englehardt, Alvin Khaled, Eduardo Barraza, Hitoshi Yamauchi, Jaewon Jung, Mrgoodlight, Shinichi Hayashi, Sid Sarasvati, Dominic Beaumont, Shannon Prater, Ubiquity Ventures, Matias Forti, Brian Henry, Tim Palade, Petar Vecutin, Nicolas baumann, Jason Singh, Robert Riley, vornska, Barry Silverman

My Gemma walkthrough notebook: https://colab.research.google.com/drive/1Y68yNr5TcHr4G5RJ0QHZhKkDe55AUkVj?usp=sharing

Most animations made with Manim: https://github.com/3b1b/manim

References and Further Reading

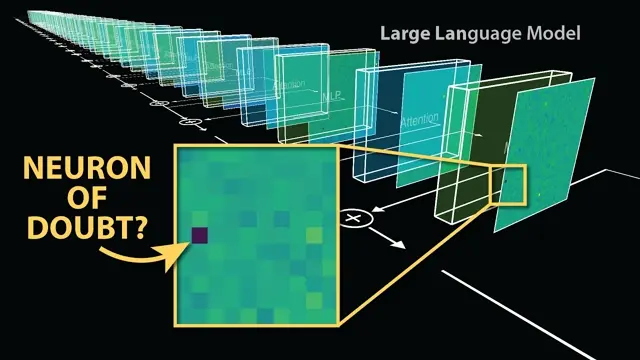

Chris Olah’s original “Dark Matter of Neural Networks” post: https://transformer-circuits.pub/2024/july-update/index.html#dark-matter

Great recent interview with Chris Olah: https://www.youtube.com/watch?v=ugvHCXCOmm4

Gemma Scope: https://arxiv.org/pdf/2408.05147

Experiment with SAEs yourself here! https://www.neuronpedia.org/

Relevant work from the Anthropic team:

https://transformer-circuits.pub/2022/toy_model/index.html

https://transformer-circuits.pub/2023/monosemantic-features

https://transformer-circuits.pub/2024/scaling-monosemanticity/

Excellent intro to Mechanistic Interpretability: https://arena3-chapter1-transformer-interp.streamlit.app/%5B1.2%5D_Intro_to_Mech_Interp

Neel Nanda’s Mechanistic Interpretability Explainer: https://dynalist.io/d/n2ZWtnoYHrU1s4vnFSAQ519J

Transformer Lens: https://github.com/TransformerLensOrg/TransformerLens

SAE Lens: https://jbloomaus.github.io/SAELens/

Technical Notes

1. There are more advanced and more meaningful ways to map mid-layer vectors to outputs. See: https://arxiv.org/pdf/2303.08112, https://neuralblog.github.io/logit-prisms/, https://www.lesswrong.com/posts/AcKRB8wDpdaN6v6ru/interpreting-gpt-the-logit-lens

2. The 6x2304 matrix is actually 7x2304; we’re ignoring the row for the beginning-of-sequence (BOS) token.

3. Gemma also includes positional embeddings and many normalization layers, which we didn’t really cover.

4. I sometimes conflate tokens and words. In this example each word is a single token, so the distinction doesn’t matter much.

5. The “_” characters represent spaces in the token strings.
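
For anyone who wants to see notes 2, 4 and 5 concretely, here is a minimal sketch in plain Python. It is not taken from the walkthrough notebook: the prompt, the "<bos>" spelling, the all-zero activations and the helper names (shape, drop_bos, detokenize) are assumptions made up for illustration. Only the 2304 width and the 7-vs-6 row counts come from the notes above.

```python
D_MODEL = 2304   # hidden width quoted in note 2
BOS = "<bos>"    # assumed spelling of the beginning-of-sequence token

# Hypothetical prompt where every word is exactly one token (note 4).
# A leading "_" marks a space before the word (note 5).
tokens = [BOS, "The", "_cat", "_sat", "_on", "_the", "_mat"]

# Placeholder activations: one row of D_MODEL zeros per token.
# A real model would fill these rows with actual values.
activations = [[0.0] * D_MODEL for _ in tokens]


def shape(matrix):
    """Return (rows, columns) for a list-of-lists matrix."""
    return (len(matrix), len(matrix[0]) if matrix else 0)


def drop_bos(token_list, rows):
    """Remove the leading BOS token and its matching row, if present."""
    if token_list and token_list[0] == BOS:
        return token_list[1:], rows[1:]
    return token_list, rows


def detokenize(token_list):
    """Join token strings, turning each "_" marker back into a space."""
    return "".join(t.replace("_", " ") for t in token_list)


full_shape = shape(activations)                      # includes the BOS row
kept_tokens, kept_rows = drop_bos(tokens, activations)
kept_shape = shape(kept_rows)                        # BOS row removed
text = detokenize(kept_tokens)

print(full_shape, kept_shape, repr(text))
# (7, 2304) (6, 2304) 'The cat sat on the mat'
```

The row count drops from 7 to 6 once the BOS row is removed, which is the 6x2304 matrix referred to in note 2.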