2025-05-09

[public] 96.1K views, 17.9K likes

Take your personal data back with Incogni! Use code WELCHLABS and get 60% off an annual plan: http://incogni.com/welchlabs

Loss Landscape Posters! 21:23

https://www.welchlabs.com/resources/loss-landscape-poster-17x19

https://www.welchlabs.com/resources/loss-landscape-poster-digital-download

Poster and Book Bundle

https://www.welchlabs.com/resources/loss-landscape-bundle-w-imaginary-numbers-book

Special Matte Black Edition Poster

https://www.welchlabs.com/resources/loss-landscape-poster-17x22-matte-black-special-edition

Welch Labs Book

https://www.welchlabs.com/resources/imaginary-numbers-book

Sections

0:00 - Intro

1:18 - How Incogni gets me more focus time

3:01 - What are we measuring again?

6:18 - How to make our loss go down?

7:32 - Tuning one parameter

9:11 - Tuning two parameters together

11:01 - Gradient descent

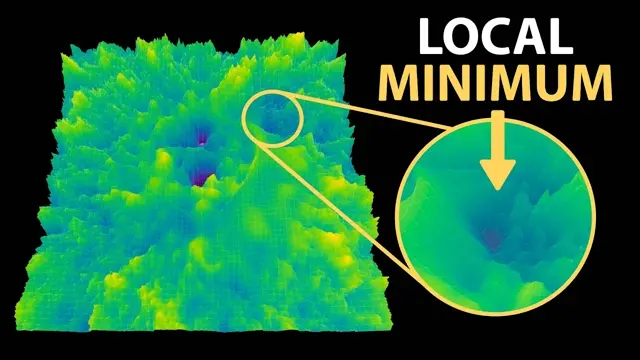

13:18 - Visualizing high dimensional surfaces

15:10 - Loss Landscapes

16:55 - Wormholes!

17:55 - Wikitext

18:55 - But where do the wormholes come from?

20:00 - Why local minima are not a problem

21:23 - Posters

Special Thanks to Patrons https://www.patreon.com/welchlabs

Juan Benet, Ross Hanson, Yan Babitski, AJ Englehardt, Alvin Khaled, Eduardo Barraza, Hitoshi Yamauchi, Jaewon Jung, Mrgoodlight, Shinichi Hayashi, Sid Sarasvati, Dominic Beaumont, Shannon Prater, Ubiquity Ventures, Matias Forti, Brian Henry, Tim Palade, Petar Vecutin, Nicolas baumann, Jason Singh, Robert Riley, vornska, Barry Silverman, Jake Ehrlich, Mitch Jacobs

References

Li et al: Visualizing the Loss Landscape of Neural Nets. https://arxiv.org/abs/1712.09913

Talking Nets: An Oral History of Neural Networks. (2000). United Kingdom: MIT Press. Hinton quote is on p376.

Goodfellow, I., Bengio, Y., Courville, A. (2016). Deep Learning. United Kingdom: MIT Press.

Prince, S. J. (2023). Understanding Deep Learning. United Kingdom: MIT Press.

Manim Animations: https://github.com/stephencwelch/manim_videos

Premium Beat IDs

MWROXNAY0SPXCMBS
