2017-11-03

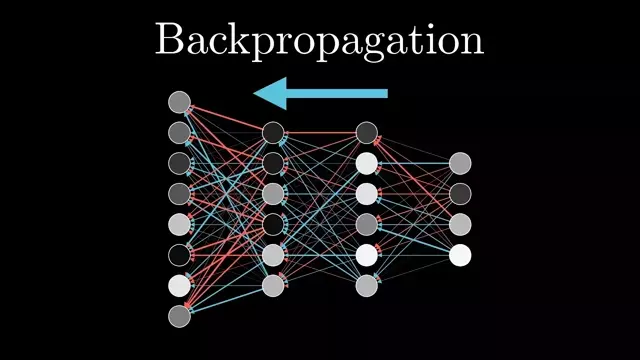

What's actually happening to a neural network as it learns?

Help fund future projects: https://www.patreon.com/3blue1brown

An equally valuable form of support is to simply share some of the videos.

Special thanks to these supporters: http://3b1b.co/nn3-thanks

Written/interactive form of this series: https://www.3blue1brown.com/topics/neural-networks

And special thanks to CrowdFlower: http://3b1b.co/crowdflower

Home page: https://www.3blue1brown.com/

The following video is sort of an appendix to this one. Its main goal is to connect the visual walkthrough here with the representation of these "nudges" as partial derivatives, which is how you will find backpropagation described in other resources, such as Michael Nielsen's book or Chris Olah's blog.

Video timeline:

0:00 - Introduction

0:23 - Recap

3:07 - Intuitive walkthrough example

9:33 - Stochastic gradient descent

12:28 - Final words

Thanks to these viewers for their contributions to the translations:

Russian audio track: Влад Бурмистров (Vlad Burmistrov)

Italian: @teobucci

Vietnamese: CyanGuy111

/youtube/video/tIeHLnjs5U8

/youtube/channel/UCYO_jab_esuFRV4b17AJtAw

/youtube/video/fNk_zzaMoSs