2021-07-30

[public] 74.4K views, 10.3K likes, 35.0 dislikes audio only

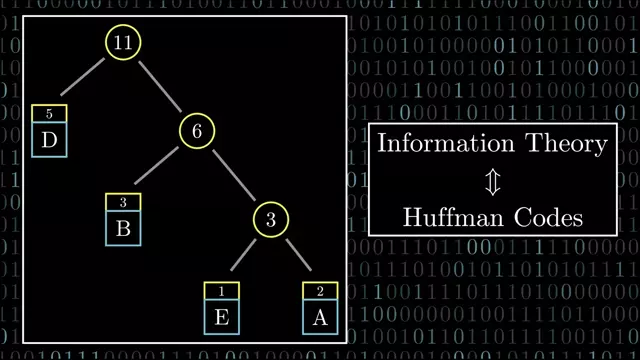

Huffman Codes are one of the most important discoveries in the field of data compression. When you first see them, they almost feel obvious in hindsight, mainly due to how simple and elegant the algorithm ends up being. But there's an underlying story of how they were discovered by Huffman and how he built the idea from early ideas in information theory that is often missed. This video is all about how information theory inspired the first algorithms in data compression, which later provided the groundwork for Huffman's landmark discovery.

0:00 Intro

2:02 Modeling Data Compression Problems

6:20 Measuring Information

8:14 Self-Information and Entropy

11:03 The Connection between Entropy and Compression

16:47 Shannon-Fano Coding

19:52 Huffman's Improvement

24:10 Huffman Coding Examples

26:10 Huffman Coding Implementation

27:08 Recap

This video is supported by a community of Patreons

Special Thanks to the following Patreons:

Burt Humburg

Michael Nawenstein

Richard Wells

Sebastian Gamboa

Zac Landis

Corrections:

At 9:34, the entropy was calculated with log base 10 instead of the expected log base 2,. The correct values should be H(P) = 1.49 bits and H(P) = 0.47 bits.

At 16:23, all logarithms should be negated, I totally forgot about the negative sign.

At 27:24, I should have said the least likely symbols should have the *longest encoding.

This video wouldn't be possible without the open source library manim created by 3blue1brown: https://github.com/3b1b/manim

Here is link to the repository that contains the code used to generate the animations in this video: https://github.com/nipunramk/Reducible

Music attributions:

Midsummer Sky by Kevin MacLeod is licensed under a Creative Commons Attribution 4.0 license. https://creativecommons.org/licenses/...

Source: http://incompetech.com/music/royalty-...

Artist: http://incompetech.com/

Luminous Rain by Kevin MacLeod is licensed under a Creative Commons Attribution 4.0 license. https://creativecommons.org/licenses/...

Source: http://incompetech.com/music/royalty-...

Artist: http://incompetech.com/

This video put together a variety of great resources on information theory, data compression, Shannon-Fano codes, and Huffman codes. Here are some links that I found most helpful while doing research.

A great resource on Shannon's source coding theorem (with the proof): https://mbernste.github.io/posts/sourcecoding/

Huffman's original paper: http://compression.ru/download/articles/huff/huffman_1952_minimum-redundancy-codes.pdf

Great chapter on Information Theory motivating various data compression ideas: https://www.princeton.edu/~cuff/ele201/kulkarni_text/information.pdf

Great book for further reading: Data Compression by Khalid Sayood

Algorithmic perspective on Huffman Codes: Section 5.2 of Algorithms by Papadimitriou et al

More elaboration on discovery of Huffman Codes: https://www.maa.org/sites/default/files/images/upload_library/46/Pengelley_projects/Project-14/Huffman.pdf

A really nice resource on data compression and information theory: http://www.ece.virginia.edu/~ffh8x/moi/compression.html

Implementation of Huffman Codes: https://www.techiedelight.com/huffman-coding/

/youtube/video/B3y0RsVCyrw?t=122

/youtube/video/B3y0RsVCyrw?t=380

/youtube/video/B3y0RsVCyrw?t=494

/youtube/video/B3y0RsVCyrw?t=663

/youtube/video/B3y0RsVCyrw?t=1007

/youtube/video/B3y0RsVCyrw?t=1192

/youtube/video/B3y0RsVCyrw?t=1450

/youtube/video/B3y0RsVCyrw?t=1570

https://www.patreon.com/reducible

/youtube/video/yYEMxqreA10

/youtube/channel/UCK8XIGR5kRidIw2fWqwyHRA