2025-10-02

[public] 30.6K views, 2.30K likes, dislikes audio only

Take action to prevent the AI apocalypse at: https://campaign.controlai.com/

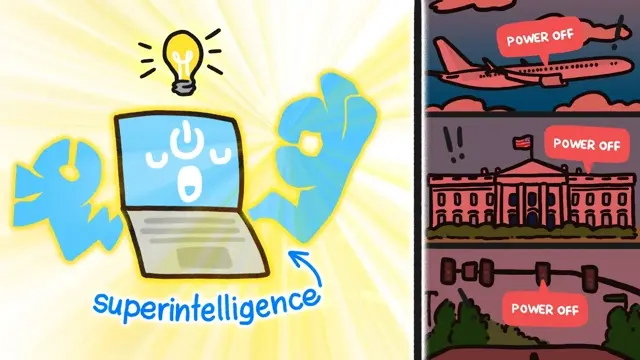

OK, so “misaligned AI” is dangerous. But if it does decide to kill us, what SPECIFICALLY could it do?

LEARN MORE

**************

To learn more about this topic, start your googling with these keywords:

- Superintelligence: Any intellect that greatly exceeds the cognitive performance of humans in virtually all domains of interest.

- Misaligned AI: Artificial intelligence systems whose goals, behaviors, or outcomes do not match human intentions or ethical principles.

- AI Value Alignment: The process of designing and developing Artificial Intelligence systems so their goals and actions are consistent with beneficial human values and ethical principles.

SUPPORT MINUTEEARTH

**************************

If you like what we do, you can help us:

- Become our patron: https://patreon.com/MinuteEarth

- Our merch: http://dftba.com/minuteearth

- Our book: https://minuteearth.com/books

- Sign up to our newsletter: http://news.minuteearth.com

- Share this video with your friends and family

- Leave us a comment (we read them!)

CREDITS

*********

David Goldenberg | Script Writer, Narrator and Director

Arcadi Garcia i Rius | Storyboard Artist

Sarah Berman | Illustration, Video Editing and Animation

Nathaniel Schroeder | Music

MinuteEarth is produced by Neptune Studios LLC

OUR STAFF

************

Lizah van der Aart • Sarah Berman • Cameron Duke

Arcadi Garcia i Rius • David Goldenberg • Melissa Hayes

Henry Reich • Ever Salazar • Leonardo Souza • Kate Yoshida

OUR LINKS

************

YouTube | https://youtube.com/MinuteEarth

TikTok | https://tiktok.com/@minuteearth

Twitter | https://twitter.com/MinuteEarth

Instagram | https://instagram.com/minute_earth

Facebook | https://facebook.com/Minuteearth

Website | https://minuteearth.com

Apple Podcasts | https://podcasts.apple.com/us/podcast/minuteearth/id649211176

REFERENCES

**************

Park, P. S., et al. (2024). AI deception: A survey of examples, risks, and potential solutions. Patterns (New York, N.Y.), 5(5), 100988. https://doi.org/10.1016/j.patter.2024.100988

Vermeer, M. (2025). Could AI Really Kill Off Humans? Scientific American. https://www.scientificamerican.com/article/could-ai-really-kill-off-humans/

de Lima, R. C., et al. (2024). Artificial intelligence challenges in the face of biological threats: emerging catastrophic risks for public health. Frontiers in Artificial Intelligence, 7. https://www.frontiersin.org/journals/artificial-intelligence/articles/10.3389/frai.2024.1382356

Carlsmith, J. (2022). Is Power-Seeking AI an Existential Risk? arXiv. https://arxiv.org/abs/2206.13353

Bucknall, B., & Dori-Hacohen, S. (2023). Current and Near-Term AI as a Potential Existential Risk Factor. arXiv. https://arxiv.org/abs/2209.10604

Bengio, Y., et al. (2024). Managing extreme AI risks amid rapid progress. Science (New York, N.Y.), 384(6698), 842–845. https://doi.org/10.1126/science.adn0117

Kasirzadeh, A. (2024). Two Types of AI Existential Risk: Decisive and Accumulative. arXiv. https://arxiv.org/pdf/2401.07836.pdf

Lavazza, A., & Vilaça, M. (2024). Human Extinction and AI: What We Can Learn from the Ultimate Threat. Philosophy & Technology. https://link.springer.com/article/10.1007/s13347-024-00706-2

/youtube/video/x_XcChy9U5I