2024-09-13

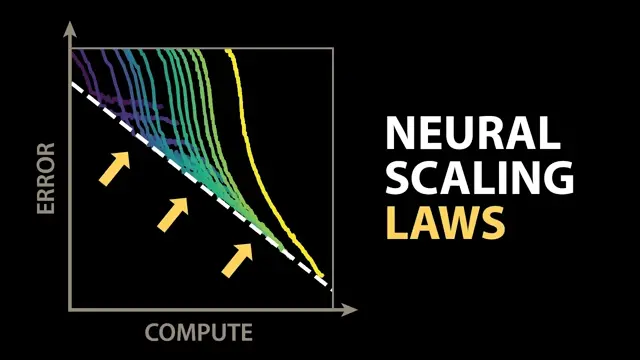

Have we discovered an ideal gas law for AI? Head to https://brilliant.org/WelchLabs/ to try Brilliant for free for 30 days and get 20% off an annual premium subscription.

Welch Labs Imaginary Numbers Book!

https://www.welchlabs.com/resources/imaginary-numbers-book

Welch Labs Posters: https://www.welchlabs.com/resources

Support Welch Labs on Patreon! https://www.patreon.com/welchlabs

Special thanks to Patrons: Juan Benet, Ross Hanson, Yan Babitski, AJ Englehardt, Alvin Khaled, Eduardo Barraza, Hitoshi Yamauchi, Jaewon Jung, Mrgoodlight, Shinichi Hayashi, Sid Sarasvati, Dominic Beaumont, Shannon Prater, Ubiquity Ventures, Matias Forti, Brian Henry, Tim Palade, Petar Vecutin

Learn more about Welch Labs! https://www.welchlabs.com

TikTok: https://www.tiktok.com/@welchlabs

Instagram: https://www.instagram.com/welchlabs

REFERENCES

A Neural Scaling Law from the Dimension of the Data Manifold: https://arxiv.org/pdf/2004.10802

First 2020 OpenAI Scaling Paper: https://arxiv.org/pdf/2001.08361

GPT-3 Paper: https://arxiv.org/pdf/2005.14165

Second 2020 OpenAI Scaling Paper: https://arxiv.org/pdf/2010.14701

Google DeepMind “Chinchilla Scaling” Paper: https://arxiv.org/abs/2203.15556

Nice summary of Chinchilla Scaling: https://www.lesswrong.com/posts/6Fpvch8RR29qLEWNH/chinchilla-s-wild-implications

GPT-4 Technical Report: https://arxiv.org/pdf/2303.08774

Nice Neural Scaling Laws Summary: https://www.lesswrong.com/posts/Yt5wAXMc7D2zLpQqx/an-140-theoretical-models-that-predict-scaling-laws

Explaining Neural Scaling Laws: https://arxiv.org/pdf/2102.06701

High Cost of Training GPT-4: https://www.wired.com/story/openai-ceo-sam-altman-the-age-of-giant-ai-models-is-already-over/

Nvidia V100 FLOPs: https://lambdalabs.com/blog/demystifying-gpt-3

Nvidia V100 Original Price: https://www.microway.com/hpc-tech-tips/nvidia-tesla-v100-price-analysis/#:~:text=Tesla%20GPU%20model,Key%20Points

Great paper on scaling up training infrastructure: https://arxiv.org/pdf/2104.04473

Eight Things to Know about LLMs: https://arxiv.org/abs/2304.00612

Emergent Properties of LLMs: https://arxiv.org/abs/2206.07682

Theoretical Motivation for Cross Entropy (Section 6.2): https://www.deeplearningbook.org/

Some papers that appear to pass the compute-efficient frontier

https://arxiv.org/pdf/2206.14486

https://arxiv.org/abs/2210.11399

Leaked GPT-4 training info

https://patmcguinness.substack.com/p/gpt-4-details-revealed

https://www.semianalysis.com/p/gpt-4-architecture-infrastructure

https://epochai.org/blog/tracking-large-scale-ai-models