2025-03-05

Thanks to KiwiCo for sponsoring today’s video! Go to https://www.kiwico.com/welchlabs and use code WELCHLABS for 50% off your first monthly club crate or for 20% off your first Panda Crate!

MLA/DeepSeek Poster at 17:12 (Free shipping for a limited time with code DEEPSEEK):

https://www.welchlabs.com/resources/mladeepseek-attention-poster-13x19

Limited edition MLA Poster and Signed Book:

https://www.welchlabs.com/resources/deepseek-bundle-mla-poster-and-signed-book-limited-run

Imaginary Numbers book is back in stock!

https://www.welchlabs.com/resources/imaginary-numbers-book

Special Thanks to Patrons https://www.patreon.com/c/welchlabs

Juan Benet, Ross Hanson, Yan Babitski, AJ Englehardt, Alvin Khaled, Eduardo Barraza, Hitoshi Yamauchi, Jaewon Jung, Mrgoodlight, Shinichi Hayashi, Sid Sarasvati, Dominic Beaumont, Shannon Prater, Ubiquity Ventures, Matias Forti, Brian Henry, Tim Palade, Petar Vecutin, Nicolas baumann, Jason Singh, Robert Riley, vornska, Barry Silverman, Jake Ehrlich

References

DeepSeek-V2 paper: https://arxiv.org/pdf/2405.04434

DeepSeek-R1 paper: https://arxiv.org/abs/2501.12948

Great Article by Ege Erdil: https://epoch.ai/gradient-updates/how-has-deepseek-improved-the-transformer-architecture

GPT-2 Visualization: https://github.com/TransformerLensOrg/TransformerLens

Manim Animations: https://github.com/stephencwelch/manim_videos

Technical Notes

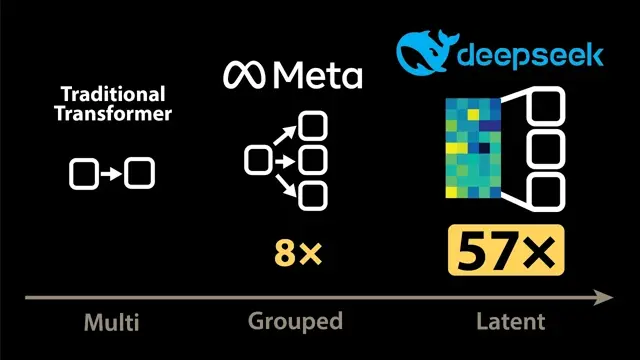

1. Note that the DeepSeek-V2 paper claims a KV cache size reduction of 93.3%. They don’t exactly publish their methodology, but as far as I can tell it’s something like this: start with the DeepSeek-V2 hyperparameters here: https://huggingface.co/deepseek-ai/DeepSeek-V2/blob/main/configuration_deepseek.py. num_hidden_layers=30, num_attention_heads=32, v_head_dim=128. If DeepSeek-V2 were implemented with traditional MHA, the KV cache size would be 2*32*128*30*2=491,520 B/token (keys and values, times heads, times head dimension, times layers, times 2 bytes per value). With MLA and a KV cache size of 576 per layer, we get a total cache size of 576*30*2=34,560 B/token. The percent reduction in KV cache size is then (491,520-34,560)/491,520=93.0%. The numbers I present in this video follow the same approach but are for the DeepSeek-V3/R1 architecture: https://huggingface.co/deepseek-ai/DeepSeek-V3/blob/main/config.json. num_hidden_layers=61, num_attention_heads=128, v_head_dim=128. So the traditional MHA cache would be 2*128*128*61*2=3,997,696 B/token. MLA reduces this to 576*61*2=70,272 B/token. For the DeepSeek-V3/R1 architecture, MLA reduces the KV cache size by a factor of 3,997,696/70,272=56.9X.

2. I claim a couple of times that MLA allows DeepSeek to generate tokens more than 6x faster than a vanilla transformer. The DeepSeek-V2 paper claims a slightly less than 6x throughput improvement with MLA, but since the V3/R1 architecture is heavier, we expect a larger lift, which is why I claim “more than 6x faster than a vanilla transformer”; in reality it’s probably significantly more than 6x for the V3/R1 architecture.

3. In all attention patterns and walkthroughs, we’re ignoring the |beginning of sentence| token. “The American flag is red, white, and” actually maps to 10 tokens if we include this starting token, and many attention patterns do assign high values to this token.

4. We’re ignoring bias terms in the matrix equations.

5. We’re ignoring positional embeddings. These are fascinating. See the DeepSeek papers and RoPE.
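
The KV cache arithmetic in note 1 can be reproduced with a few lines of Python. This is a minimal sketch under the note's own assumptions: 2 bytes per cached value, a factor of 2 for keys plus values in MHA, and an MLA cache of 576 values per layer per token. The function names are hypothetical and exist only for this illustration; they are not part of any DeepSeek code. Run as written, the V2 reduction evaluates to about 93.0% when both cache sizes use 2 bytes per value.

```python
def mha_kv_bytes_per_token(num_layers, num_heads, head_dim, bytes_per_value=2):
    """KV cache size for traditional multi-head attention, in bytes per token.

    The leading 2 counts keys and values; bytes_per_value=2 is an assumption
    (16-bit storage) taken from the arithmetic in note 1.
    """
    return 2 * num_heads * head_dim * num_layers * bytes_per_value


def mla_kv_bytes_per_token(num_layers, latent_dim=576, bytes_per_value=2):
    """KV cache size for MLA, in bytes per token.

    latent_dim=576 is the per-layer cache size quoted in note 1.
    """
    return latent_dim * num_layers * bytes_per_value


# Hyperparameters as quoted in note 1 (V2 from configuration_deepseek.py,
# V3/R1 from config.json).
v2_mha = mha_kv_bytes_per_token(num_layers=30, num_heads=32, head_dim=128)
v2_mla = mla_kv_bytes_per_token(num_layers=30)
v2_reduction_pct = 100 * (v2_mha - v2_mla) / v2_mha

v3_mha = mha_kv_bytes_per_token(num_layers=61, num_heads=128, head_dim=128)
v3_mla = mla_kv_bytes_per_token(num_layers=61)
v3_factor = v3_mha / v3_mla

print(f"V2: MHA {v2_mha:,} B/token, MLA {v2_mla:,} B/token, "
      f"reduction {v2_reduction_pct:.1f}%")
print(f"V3/R1: MHA {v3_mha:,} B/token, MLA {v3_mla:,} B/token, "
      f"factor {v3_factor:.1f}X")
```

Changing `bytes_per_value` scales both cache sizes equally, so the percent reduction and the reduction factor do not depend on it.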